CUHK

News Centre

CUHK and The Nippon Foundation (Japan) Jointly Launch “SignTown”: a Sign Language Learning Game Featuring Real-time AI-based Sign Language Recognition Developed by Google

SignTown (https://sign.town), an online sign language learning game that uses AI-based sign language recognition to promote understanding of sign language and deaf awareness, was jointly launched in beta by the Centre for Sign Linguistics and Deaf Studies (CSLDS) of the Department of Linguistics and Modern Languages at The Chinese University of Hong Kong (CUHK) and The Nippon Foundation (Japan).

This project was made possible by a collaboration between CUHK, The Nippon Foundation, Google, and the Sign Language Research Center of Kwansei Gakuin University. As a leading authority on the linguistic research of sign language in Hong Kong, CSLDS at CUHK provided academic expertise for developing the sign language recognition model and organised the data collection for Hong Kong Sign Language (HKSL). The Nippon Foundation funded the project, provided insights into sign language and deaf culture, and co-managed the project. Google developed the original concept and led the technical exploration and development of the AI-powered sign language recognition system. Kwansei Gakuin University helped collect training data for Japanese Sign Language (JSL, also known as Shuwa in Japanese) and provided insights into deaf culture in Japan.

SignTown is released as a beta version today so that it can be improved based on user feedback. The first complete version of SignTown, now under development, is targeted to launch on 23 September 2021, the International Day of Sign Languages.

Learn Hong Kong and Japanese sign language through interactive games

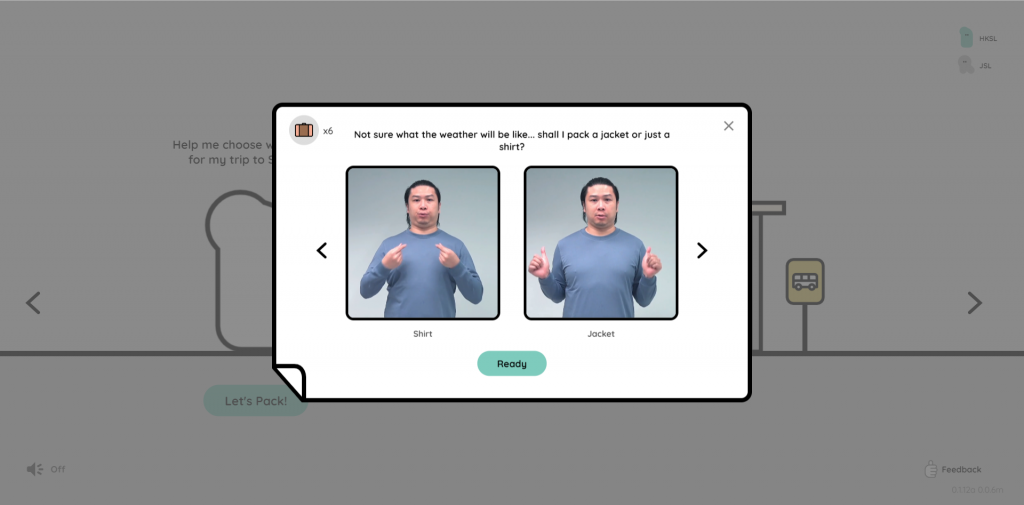

SignTown is a web-based, real-time sign recognition game in which users control a cute avatar who has just arrived in a town in a fictional world, where everyone communicates in sign language to get things done. The research team used TensorFlow™, an end-to-end open source platform for machine learning, to train the sign language recognition model. The game enables users to learn and express themselves in sign language, and to get feedback on their signing with nothing more than a computer and a webcam.

Users can sign in both HKSL and JSL and switch between the two during the game. As they play, they collect various items whenever their signs are correctly recognised. SignTown is designed around everyday themes, ranging from placing an order in a café to finding accommodation during a trip, so users learn signs from common categories. SignTown also provides information about deaf culture in different regions.
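To illustrate how a game like SignTown might decide that a sign has been "correctly recognised", the following Python sketch smooths a stream of recogniser outputs before confirming a sign. The vocabulary, the `SignChecker` class, the window size and the confidence threshold are all hypothetical; the article does not describe SignTown's actual game logic.

```python
from collections import Counter, deque
from typing import Deque, List, Optional, Sequence

# Hypothetical vocabulary for one scene (not SignTown's real word list).
SIGNS: List[str] = ["coffee", "tea", "thank_you", "hotel"]


class SignChecker:
    """Decides when a sign counts as 'correctly recognised' (illustrative only).

    Assumes a recogniser emits one probability per entry in SIGNS at each step.
    """

    def __init__(self, window: int = 5, threshold: float = 0.7) -> None:
        self.window = window
        self.threshold = threshold
        self.recent: Deque[Optional[str]] = deque(maxlen=window)

    def update(self, probs: Sequence[float]) -> Optional[str]:
        # Record the top-scoring sign, or None if the model is not confident.
        best = max(range(len(probs)), key=lambda i: probs[i])
        self.recent.append(SIGNS[best] if probs[best] >= self.threshold else None)
        if len(self.recent) < self.window:
            return None  # not enough history yet
        # A strict majority of the window must agree, which smooths out jitter.
        label, count = Counter(self.recent).most_common(1)[0]
        return label if label is not None and count > self.window // 2 else None

    def matches(self, probs: Sequence[float], target: str) -> bool:
        # True when the smoothed prediction equals the sign the game asked for.
        return self.update(probs) == target
```

For example, with `SignChecker(window=3, threshold=0.6)`, three consecutive confident predictions of the first sign make `update` return `"coffee"` on the third call; a game could then award an item whenever `matches(probs, target)` is true. Requiring agreement over several steps trades a little latency for fewer false "correct" signals.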

AI-based recognition helps promote understanding of sign language

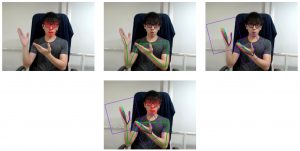

Apart from hand movements and body motions, facial expressions and other non-manual features also play important roles in sign language. Past sign language recognition models focused mainly on hands and gestures and did not adequately address these features, which limited the accuracy of their results. Strict equipment requirements, such as 3D cameras and digital gloves for capturing three-dimensional sign language movements, made the technology even harder to popularise.

The team combined multiple machine learning models to track these features without special equipment or specific settings: PoseNet for human pose and gesture recognition, Facemesh for the mouth and facial expressions, and Hands Tracking for handshape and finger detection. Furthermore, the sign language data were provided by native deaf signers from Hong Kong and Japan to ensure their accuracy.
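As a rough illustration of how the outputs of these three trackers could be combined, this Python sketch fuses 2D body, face and hand landmarks into a single feature vector per webcam frame. The landmark counts are those of the public models (17 PoseNet keypoints, 468 Facemesh landmarks, 21 landmarks per hand); the function name, the zero-filling of undetected parts and the shoulder-based normalisation are assumptions, not SignTown's documented design.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]

# Landmark counts of the public pose, face and hand models.
N_POSE, N_FACE, N_HAND = 17, 468, 21
L_SHOULDER, R_SHOULDER = 5, 6  # PoseNet keypoint order (COCO)


def frame_features(
    pose: Sequence[Point],
    face: Optional[Sequence[Point]],
    left_hand: Optional[Sequence[Point]],
    right_hand: Optional[Sequence[Point]],
) -> List[float]:
    """Fuses body, face and hand landmarks into one flat per-frame vector."""
    if len(pose) != N_POSE:
        raise ValueError(f"expected {N_POSE} pose keypoints, got {len(pose)}")
    (lx, ly), (rx, ry) = pose[L_SHOULDER], pose[R_SHOULDER]
    cx, cy = (lx + rx) / 2, (ly + ry) / 2
    # Centring on the shoulders and scaling by shoulder width makes the
    # features less sensitive to where the signer stands relative to the webcam.
    scale = math.hypot(lx - rx, ly - ry) or 1.0

    feats: List[float] = []
    parts = ((pose, N_POSE), (face, N_FACE), (left_hand, N_HAND), (right_hand, N_HAND))
    for points, n in parts:
        if points is None:
            # Part not detected in this frame: zero-fill to keep a fixed length.
            feats.extend([0.0] * (2 * n))
            continue
        if len(points) != n:
            raise ValueError(f"expected {n} landmarks, got {len(points)}")
        for x, y in points:
            feats.extend(((x - cx) / scale, (y - cy) / scale))
    return feats
```

Every frame yields a vector of the same length (1,054 values here), so a sequence of frames can be fed to a sequence classifier regardless of which body parts were visible.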

Professor Gladys Tang, Professor of Linguistics and Director of the Centre for Sign Linguistics and Deaf Studies, said, “In 2020, we started Project Shuwa, which aims to develop an automatic translation model that can recognise natural sign language conversations and translate them into spoken language. SignTown, the very first step of the project, focuses on the technical barriers to recognising the phonetic features that are essential in sign language expressions. Sign language dictionaries and short sentence recognition will be the next step, and we hope these new technologies can bridge the gap between the deaf and the hearing.”

Google has made the source code for the sign language recognition technology behind SignTown freely available to the public. This makes it easier for developers and researchers around the world to build similar recognition technology for other sign languages. The source code is available at https://github.com/google/shuwa.
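Developers adapting such a system to another sign language need some way to match new recordings against a handful of example signs. One common approach, shown here as a Python sketch, is nearest-neighbour classification with dynamic time warping (DTW), which tolerates the same sign being performed at different speeds. This is a swapped-in technique for illustration only; it is not necessarily how the google/shuwa code works, and the function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Frame = Sequence[float]  # one fixed-length feature vector per video frame


def dtw(a: Sequence[Frame], b: Sequence[Frame]) -> float:
    """Dynamic time warping cost between two feature sequences."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])  # Euclidean frame distance
            # Extend the cheapest of: repeat a frame of b, repeat a frame of a, or advance both.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify(query: Sequence[Frame], refs: Dict[str, List[Sequence[Frame]]]) -> str:
    """1-nearest-neighbour: returns the label of the closest reference recording."""
    return min(
        ((label, dtw(query, ex)) for label, examples in refs.items() for ex in examples),
        key=lambda pair: pair[1],
    )[0]
```

Each frame here is any fixed-length list of numbers, for example landmark coordinates. Adding a new sign only requires recording a few reference examples, although DTW's cost grows with the product of the two sequence lengths, so it suits short, isolated signs better than continuous conversation.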

CSLDS dedicated to promoting the popularisation of sign language

The United Nations Convention on the Rights of Persons with Disabilities, adopted in 2006, clearly states that “Sign Language is a language”. An environment that allows deaf people to use sign language is essential for promoting their participation in society. With growing advocacy for the participation of deaf people, sign language has become more widely used in recent years; for example, sign interpretation is now provided for news programmes and government announcements. Growing public interest in sign language and deaf culture offers a good opportunity to promote sign language. Alongside the widespread use of information and communication technologies, the sign language learning game SignTown has been developed to promote understanding of sign language and deaf people through advanced technology.

Established in 2003, CSLDS is the only institution in Asia that offers research and professional training in sign linguistics and deaf education for university students, deaf adults, and professionals such as speech therapists, audiologists and social workers. Through its promotion of sign linguistics, sign interpretation and deaf education in Hong Kong, CSLDS has had a tremendous impact on deaf development and education, both locally and across Asia.

About The Nippon Foundation

Since its establishment in 1962, the Nippon Foundation has been Japan’s largest social-contribution foundation, supporting public-interest projects that transcend both domains and national borders. In order to realise “a society where everyone supports everyone”, the Nippon Foundation is active in domains including those concerned with children, disabilities, disasters, oceans, and international cooperation. https://www.nippon-foundation.or.jp/

About Kwansei Gakuin University

Kwansei Gakuin University, founded in 1889, is a comprehensive university that provides education based on the principles of Christianity. Its Sign Language Research Center (SLRC) was established in April 2015 as the first sign language research institution at a Japanese university, and it has been active since 2016 with support from The Nippon Foundation. The SLRC contributed to this project by selecting Japanese Sign Language (JSL) words and collecting JSL data for use in machine learning.